Recently I have been reading about texture generation and interpolation, and I thought it’d be something interesting if we could generate textures with GANs. Ideally, similar to the GauGAN work, we can specify the layout of an image and let the GAN fill out the textures.

There’re a few papers on texture synthesis out there, but this one called Spatial GAN caught my eye - mostly because of the simplicity of it. It’s an earlier GAN paper and the architecture and training still mostly follows DCGAN. However, the architecture is fully convolutional, and can be used to generate different sizes of texture images at test time.

So, let’s give it a try!

Let’s start with this single texture from the DTD dataset, and use random crops from it to train the GAN:

The first architecture I have is quite shallow: the crops from the real texture is 64x64, and the input noise to the generator has spatial dimension 16x16. The generator only scales up the noise 4x (per side), from 16x16 to 64x64.

These are some textures generated by this model, with size 64x64:

Just for fun, I mess with the standard deviation when sampling the noise for the generator. We can see the texture goes from smooth to having more constrast, with increasing stddev:

And, most crucial of all, how about the promise that we could generate texture of different sizes? Let’s make something large.

Well, that’s not bad, but due to how shallow the networks are, the structure of the original texture (fabric-like grids) is not captured. Let’s try again with deeper networks. This time, the noise has spatial dimensions 4x4, and scale up 16x. The result:

Looks like a nice piece of fabric :)

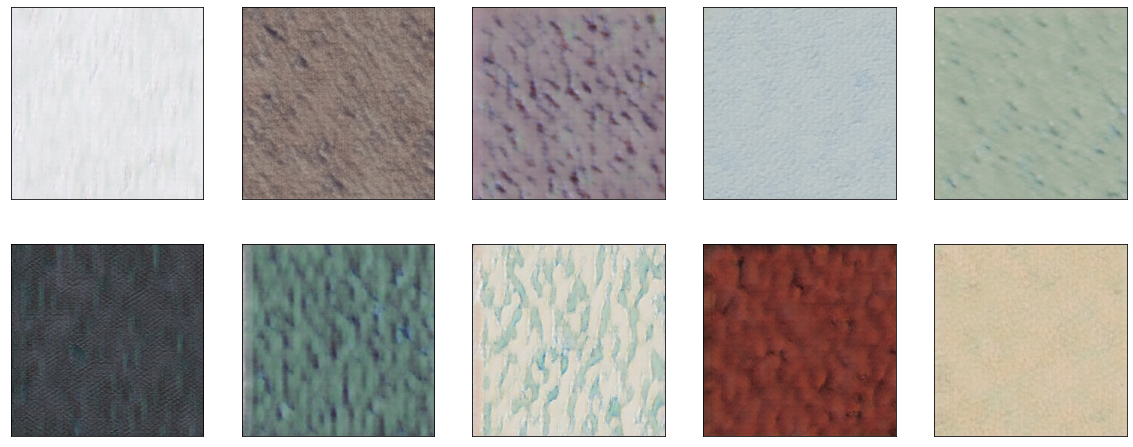

With more textures in the training set, we can also generate a range of textures. To map different textures into different latent space, instead of directly sampling the noise from a normal distribution, I separate the sampling process into two steps. First, a “texture type” is sampled from a normal distribution. This noise vector is repeated spatially (since the same texture is repeated through out the image). Then, we use the “texture type” as mean to sample from another Gaussian, with smaller standard deviation. This time, all spatial vectors are sampled independently.

And there we go:

A few thoughts:

- Following the recommendations in the DCGAN paper, it was pretty straightforward to get something working (as long as you don’t forget to normalize the value range of the real images like me ;P)

- There were a few trials where the discriminator was overpowering and winning all the time (loss of the discriminator stays at ~0). Keeping the generator and the discriminator “mirrored” of each other works pretty well.

- Generating larger textures (than the size used in training) doesn’t always work well. It depends on the texture’s global structure and also the network architecture.

- In training, using at least 8x8 at the top of the network makes the results better when generating larger images at test time. It might be due to the way the network is padded, and having a larger non-edge area in training helps the network learn how to repeat similar content spatially.

Code for training the texture GAN can be found here.